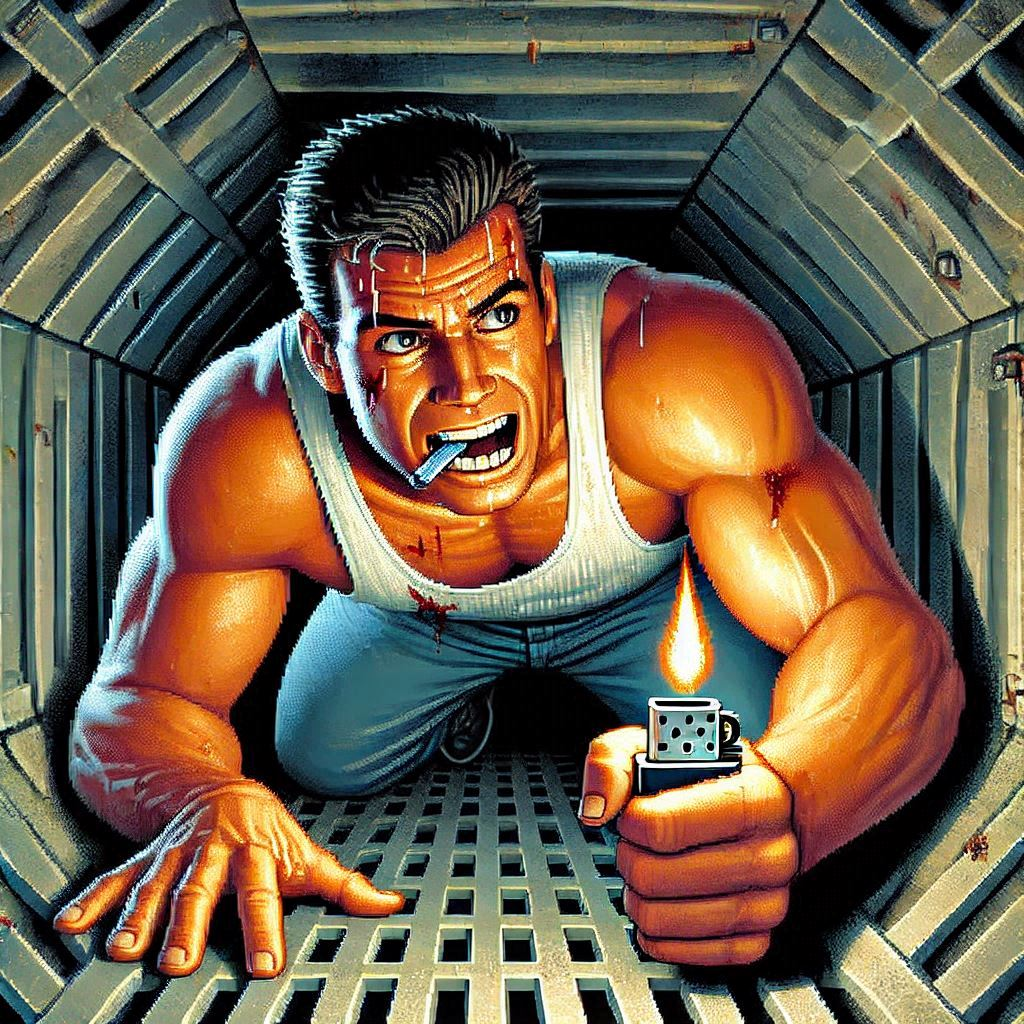

Hard to Kill: Why Auto-Increment Primary Keys Can Make Data Sync Die Harder

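Working with the SyncFramework, I’ve noticed a recurring pattern when discussing schema design with customers. One question that often surprises them concerns their choice of primary keys: “Are you using auto-increment integers or unique identifiers (like GUIDs)?” The answer matters because each database engine generates auto-increment values in its own way, and those differences surface as soon as data has to move between databases.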

Database Implementation Deep Dive

SQL Server

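SQL Server uses the IDENTITY property. Metadata for each identity column, including the last value generated, is exposed through the sys.identity_columns catalog view, and the engine caches a block of upcoming values in memory for performance. After a clean restart the counter resumes from the last value used; after an unexpected shutdown, cached values that were never handed out can be lost, which leaves a gap. The values are managed as 8-byte numbers internally, with new ranges allocated when the cache is exhausted.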

MySQL

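MySQL’s InnoDB engine keeps a separate auto-increment counter in memory for each table. Before MySQL 8.0 the counter was not persisted: after a restart, InnoDB reinitialized it from the maximum value found in the column. From MySQL 8.0 onward, the current maximum is written to the redo log whenever it changes and saved to the data dictionary at each checkpoint, so the counter survives a restart.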

PostgreSQL

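PostgreSQL takes a different approach, using separate sequence objects. Each sequence is registered as a relation in the pg_class catalog and has its own relation file holding its current state, such as the last value. Since PostgreSQL 10, parameters like increment and min/max values live in the pg_sequence catalog. Sequence changes are recorded in the write-ahead log for durability, and values obtained from a sequence are never rolled back, so an aborted transaction leaves a gap.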

Oracle

Oracle traditionally uses sequences and triggers, with modern versions (12c+) supporting identity columns. The sequence information is stored in the SEQ$ system table, tracking the last number used, cache size, and increment values.
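The caching behavior described above can be illustrated with a toy model. This is a minimal sketch, not any engine's actual implementation: the `CachedCounter` class, its `cache_size` parameter, and the `crash_and_restart` method are hypothetical names invented for the illustration. It only shows why reserving values in blocks leaves gaps when the in-memory position is lost.

```python
class CachedCounter:
    """Toy model of a counter that reserves values in blocks (hypothetical)."""

    def __init__(self, cache_size: int = 10) -> None:
        self.cache_size = cache_size
        self.persisted_high = 0  # highest value reserved on "disk"
        self.next_value = 1      # next value to hand out, kept only in memory

    def next_id(self) -> int:
        if self.next_value > self.persisted_high:
            # Cache exhausted: reserve a new block and persist its upper bound.
            self.persisted_high += self.cache_size
        value = self.next_value
        self.next_value += 1
        return value

    def crash_and_restart(self) -> None:
        # The in-memory position is lost, so the counter resumes after the
        # persisted upper bound and skips the unused part of the block.
        self.next_value = self.persisted_high + 1


counter = CachedCounter(cache_size=10)
before = [counter.next_id() for _ in range(3)]
counter.crash_and_restart()
after = [counter.next_id() for _ in range(2)]
print(before, after)  # [1, 2, 3] [11, 12]
```

Values 4 through 10 are never issued. A sync layer that assumes keys are dense, or that tries to predict the next key, would be wrong here.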

The Synchronization Challenge

This diversity in implementation creates several challenges for data synchronization:

- Unpredictable Sequence Generation: Even within the same database engine, gaps can occur due to rolled-back transactions or server restarts.

- Infrastructure Dependencies: The mechanisms for generating next values are deeply embedded within each database engine and aren’t easily accessible to frameworks like Entity Framework or XPO.

- Cross-Database Complexity: When synchronizing across different database instances, coordinating auto-increment values becomes even more complex.
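To make the cross-database problem concrete, here is a small simulation, assuming two offline nodes that each run their own counter starting at 1. The `Node` class and `conflicting_keys` helper are hypothetical and exist only for this sketch; a real sync framework tracks far more state.

```python
import uuid


class Node:
    """A stand-in for one database instance (hypothetical, illustration only)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.rows = {}
        self._next_id = 1  # each node runs its own independent counter

    def insert_auto_increment(self, payload: str) -> int:
        key = self._next_id
        self._next_id += 1
        self.rows[key] = payload
        return key

    def insert_guid(self, payload: str) -> uuid.UUID:
        key = uuid.uuid4()  # generated locally, no coordination needed
        self.rows[key] = payload
        return key


def conflicting_keys(a: Node, b: Node) -> set:
    """Keys present on both nodes that point at different rows."""
    return {k for k in a.rows.keys() & b.rows.keys() if a.rows[k] != b.rows[k]}


# Two nodes using auto-increment keys while disconnected.
int_a, int_b = Node("A"), Node("B")
for name in ("alice", "bob"):
    int_a.insert_auto_increment(name)
for name in ("carol", "dave"):
    int_b.insert_auto_increment(name)
int_conflicts = conflicting_keys(int_a, int_b)

# The same inserts using GUID keys.
guid_a, guid_b = Node("A"), Node("B")
for name in ("alice", "bob"):
    guid_a.insert_guid(name)
for name in ("carol", "dave"):
    guid_b.insert_guid(name)
guid_conflicts = conflicting_keys(guid_a, guid_b)

print(sorted(int_conflicts), len(guid_conflicts))  # [1, 2] 0
```

With integer keys, both nodes assigned 1 and 2 to different rows, so merging them requires remapping keys and every foreign key that references them. With GUID keys, the two row sets can simply be combined.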

The GUID Alternative

Using GUIDs (Globally Unique Identifiers) as primary keys offers a solution to these synchronization challenges. While GUIDs come with their own set of considerations, they provide practical uniqueness across distributed systems without requiring centralized coordination: any node can generate a key on its own, and the chance of two nodes producing the same value is negligibly small.

Traditional GUID Concerns

- Index fragmentation

- Storage size

- Performance impact
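The fragmentation and size concerns can be approximated in a few lines. This is a rough sketch that uses a sorted Python list as a stand-in for an ordered index; a real B-tree, and each engine's own GUID sort order, will behave differently in detail. The `mid_index_inserts` function is a hypothetical helper that counts how many inserts land somewhere other than the end of the index, which is the situation that leads to page splits.

```python
import bisect
import uuid


def mid_index_inserts(keys) -> int:
    """Count inserts that land before the current end of a sorted index."""
    index = []
    count = 0
    for key in keys:
        pos = bisect.bisect_left(index, key)
        if pos < len(index):
            count += 1  # the new key had to go between existing keys
        index.insert(pos, key)
    return count


n = 1000
sequential = mid_index_inserts(range(1, n + 1))
random_guids = mid_index_inserts(uuid.uuid4().bytes for _ in range(n))
guid_size = len(uuid.uuid4().bytes)  # bytes per key

print(sequential)    # 0: increasing integers always append
print(random_guids)  # close to n: random keys land all over the index
print(guid_size)     # 16, versus 4 or 8 for typical integer keys
```

Increasing integers always append to the end, while nearly every random GUID is inserted between existing keys. The key is also two to four times larger, and that cost is repeated in every index and foreign key that stores it.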

Modern Solutions

These concerns have been largely mitigated, though not eliminated, through:

- Sequential GUID generation techniques

- Improved indexing in modern databases

- Optimizations in .NET 9, which adds built-in generation of time-ordered version 7 GUIDs via Guid.CreateVersion7()
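Sequential GUID generation can be sketched as follows. This is an illustrative approximation of the UUID version 7 layout (a 48-bit Unix millisecond timestamp followed by random bits), not the code any particular library uses. The `uuid7_like` function name and its `unix_ms` parameter are assumptions made for this example.

```python
import os
import time
import uuid


def uuid7_like(unix_ms=None) -> uuid.UUID:
    """Build a time-ordered UUID following the version 7 bit layout (sketch)."""
    if unix_ms is None:
        unix_ms = time.time_ns() // 1_000_000
    # Top 48 bits: millisecond timestamp, so later IDs compare greater.
    value = (unix_ms & ((1 << 48) - 1)) << 80
    # Remaining 80 bits start out random.
    value |= int.from_bytes(os.urandom(10), "big")
    # Overwrite the 4 version bits (bits 76-79) with 0b0111.
    value = (value & ~(0xF << 76)) | (0x7 << 76)
    # Overwrite the 2 variant bits (bits 62-63) with 0b10.
    value = (value & ~(0x3 << 62)) | (0x2 << 62)
    return uuid.UUID(int=value)


earlier = uuid7_like(unix_ms=1_700_000_000_000)
later = uuid7_like(unix_ms=1_700_000_000_001)
print(earlier.version)                # 7
print(earlier < later)                # True
print(earlier.bytes < later.bytes)    # True: byte order follows time order
```

Two IDs generated within the same millisecond are ordered only by their random bits, so the ordering is approximate rather than strict. Whether the database index benefits also depends on how the engine sorts the stored value; SQL Server, for example, compares uniqueidentifier bytes in its own order, so it is worth testing against the target engine.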

Recommendations

When designing systems that require data synchronization:

- Consider using GUIDs instead of auto-increment integers for primary keys

- Evaluate sequential GUID generation for better performance

- Understand that auto-increment values, while simple, can complicate synchronization scenarios

- Plan for the infrastructure needed to maintain consistent primary key generation across your distributed system

Conclusion

The choice of primary key strategy significantly impacts your system’s ability to handle data synchronization effectively. While auto-increment integers might seem simpler at first, understanding their implementation details across different databases reveals why GUIDs often provide a more robust solution for distributed systems.

Remember: Data synchronization is not a trivial problem, and your primary key strategy plays a crucial role in its success. Take the time to evaluate your requirements and choose the appropriate approach for your specific use case.

Till next time, happy delta encoding.