by Joche Ojeda | May 24, 2024 | CPU

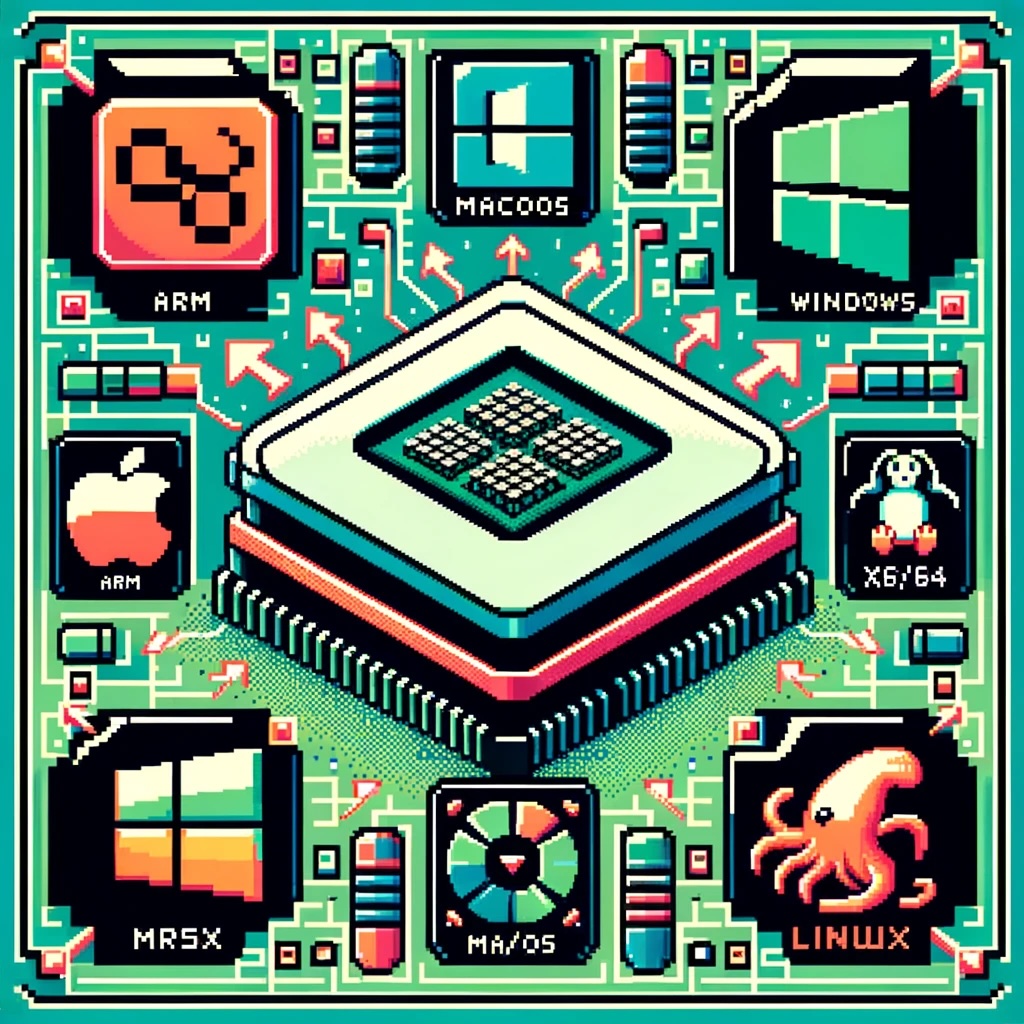

As technology continues to evolve, interoperability between different hardware architectures becomes increasingly important. One significant aspect of this interoperability is the ability to run software compiled for one CPU architecture on another. This blog post explores how CPU translation layers make that possible between ARM and x86/x64 on Windows, macOS, and Linux: x86/x64 applications on ARM hardware (Windows on ARM, Rosetta 2) and ARM applications on x86/x64 hardware (QEMU).

Windows OS: Bridging ARM and x86/x64

Microsoft’s approach to running x86/x64 applications on ARM hardware is embodied in Windows on ARM. This system lets ARM-based devices run existing Windows software, incorporating several key technologies:

- WOW64 (Windows 32-bit on Windows 64-bit): This subsystem provides compatibility for 32-bit x86 applications on ARM64 devices through a mix of emulation and native execution.

- x86/x64 Emulation: Windows 10 on ARM emulates 32-bit x86 applications, and Windows 11 on ARM adds emulation of 64-bit x64 applications. The emulation layer dynamically translates x86/x64 instructions to ARM64 instructions at runtime, using Just-In-Time (JIT) compilation techniques to convert code as it is needed.

- Native ARM64 Support: To avoid the performance overhead associated with emulation, Microsoft encourages developers to compile their applications directly for ARM64.

macOS: The Power of Rosetta 2

Apple’s transition from Intel (x86/x64) to Apple Silicon (ARM) has been facilitated by Rosetta 2, a sophisticated translation layer designed to make this process as smooth as possible:

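- Dynamic Binary Translation: Rosetta 2 converts x86_64 instructions to ARM64 instructions on the fly when it has to, for example for code that an application generates at run time, enabling users to run x86_64 applications transparently on ARM-based Macs.

- Ahead-of-Time (AOT) Translation: Rosetta 2 pre-translates x86_64 binaries to ARM64 before execution, typically when an application is installed or first launched, so most code does not need to be translated while the application runs.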

- Universal Binaries: Apple encourages developers to use Universal Binaries, which include both x86_64 and ARM64 executables, allowing the operating system to select the appropriate version based on the hardware.

Linux: Flexibility with QEMU

Linux’s open-source nature provides a versatile approach to CPU translation through QEMU, a widely used emulator that supports many guest architectures, including running ARM code on an x86/x64 host:

- User-mode Emulation: QEMU can run individual Linux executables compiled for ARM on an x86/x64 host by translating system calls and CPU instructions.

- Full-system Emulation: It can also emulate a complete ARM system, enabling an x86/x64 machine to run an ARM operating system and its applications.

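- Performance Enhancements: QEMU’s performance can be significantly improved with KVM (Kernel-based Virtual Machine), which allows near-native execution speed for guest instructions when the guest and the host share the same architecture. When emulating ARM on an x86/x64 host, QEMU relies on its own dynamic translator (TCG) instead, which is slower.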

How Translation Layers Work

The translation process involves several steps to ensure smooth execution of applications across different architectures:

- Instruction Fetch: The emulator fetches instructions from the source (ARM) binary.

- Instruction Decode: The fetched instructions are decoded into a format understandable by the translation layer.

- Instruction Translation:

  - JIT Compilation: Converts source instructions into target (x86/x64) instructions in real time.

  - Caching: Frequently used translations are cached to avoid repeated translation.

- Execution: The translated instructions are executed on the target CPU.

- System Calls and Libraries:

  - System Call Translation: System calls from the source architecture are translated to their equivalents on the host architecture.

  - Library Mapping: Shared libraries from the source architecture are mapped to their counterparts on the host system.
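To make the fetch, decode, translate, cache, and execute steps more concrete, here is a minimal sketch in C#. Everything in it is an assumption for illustration: the three-register "guest" instruction set (`MovImm`, `Add`, `Jnz`, `Halt`) is invented and has nothing to do with real ARM encodings, and the "host code" is just a C# delegate rather than emitted machine instructions. Real translators work on whole blocks of instructions and handle system calls too, which this toy version leaves out.

```csharp
using System;
using System.Collections.Generic;

// Invented toy guest instruction set (not real ARM).
public enum Op { MovImm, Add, Jnz, Halt }

public readonly record struct Instr(Op Op, int A, int B);

public sealed class ToyTranslator
{
    private readonly Instr[] _program;

    // Translation cache: guest address -> already translated host routine.
    private readonly Dictionary<int, Func<int[], int>> _cache = new();

    // Counts how many times the translator actually had to run.
    public int Translations { get; private set; }

    public ToyTranslator(Instr[] program) => _program = program;

    // Small demo program: adds 5 to r0 three times using a loop.
    public static Instr[] DemoProgram => new[]
    {
        new Instr(Op.MovImm, 1, 3),   // 0: r1 = 3 (loop counter)
        new Instr(Op.MovImm, 2, 5),   // 1: r2 = 5
        new Instr(Op.MovImm, 3, -1),  // 2: r3 = -1
        new Instr(Op.Add, 0, 2),      // 3: r0 += r2
        new Instr(Op.Add, 1, 3),      // 4: r1 += r3
        new Instr(Op.Jnz, 1, 3),      // 5: if r1 != 0 goto 3
        new Instr(Op.Halt, 0, 0),     // 6: stop
    };

    // "JIT": fetch and decode one guest instruction, return a host delegate.
    // The delegate updates the guest registers and returns the next guest
    // address, or -1 to stop.
    private Func<int[], int> Translate(int pc)
    {
        Translations++;
        Instr i = _program[pc]; // fetch
        switch (i.Op)           // decode + translate
        {
            case Op.MovImm: return regs => { regs[i.A] = i.B; return pc + 1; };
            case Op.Add:    return regs => { regs[i.A] += regs[i.B]; return pc + 1; };
            case Op.Jnz:    return regs => regs[i.A] != 0 ? i.B : pc + 1;
            case Op.Halt:   return regs => -1;
            default: throw new InvalidOperationException($"Unknown opcode {i.Op}");
        }
    }

    public int[] Run(int registerCount = 4)
    {
        var regs = new int[registerCount];
        int pc = 0;
        while (pc >= 0)
        {
            // Cache lookup: translate only on a miss.
            if (!_cache.TryGetValue(pc, out var host))
            {
                host = Translate(pc);
                _cache[pc] = host;
            }
            pc = host(regs); // execute the translated code
        }
        return regs;
    }
}
```

Running `new ToyTranslator(ToyTranslator.DemoProgram).Run()` executes the loop body three times, but each guest address is translated only once; the later iterations are served from the cache, which is the whole point of the caching step.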

Performance Considerations

- Overhead: Emulation introduces overhead, which can impact performance, particularly for compute-intensive applications.

- Optimization Strategies: Techniques like ahead-of-time compilation, caching, and promoting native support help mitigate performance penalties.

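- Hardware Support: Some ARM processors include hardware features that make binary translation cheaper. Apple Silicon, for example, can run threads under an x86-style total store ordering (TSO) memory model, which saves Rosetta 2 from inserting extra memory barriers.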

Developer Considerations

For developers, ensuring compatibility and performance across different architectures involves several best practices:

- Cross-Compilation: Developers should compile their applications for multiple architectures to provide native performance on each platform.

- Extensive Testing: Applications must be tested thoroughly in both native and emulated environments to ensure compatibility and performance.
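When testing in both native and emulated environments, it helps to know which one you are actually in. The sketch below compares two real .NET properties, `RuntimeInformation.ProcessArchitecture` and `RuntimeInformation.OSArchitecture`. The `EmulationCheck` class and its `IsLikelyEmulated` helper are hypothetical names of my own, and the rule they apply is only a heuristic: some runtime versions have reported the emulated architecture as the OS architecture, so treat the result as a hint rather than a guarantee.

```csharp
using System;
using System.Runtime.InteropServices;

public static class EmulationCheck
{
    // Heuristic: an x86 or x64 process on an Arm64 operating system
    // can only be running through a translation layer.
    public static bool IsLikelyEmulated(Architecture process, Architecture os) =>
        os == Architecture.Arm64 &&
        (process == Architecture.X64 || process == Architecture.X86);

    // Builds a one-line report for the current process.
    public static string Report()
    {
        Architecture process = RuntimeInformation.ProcessArchitecture;
        Architecture os = RuntimeInformation.OSArchitecture;
        return $"Process: {process}, OS: {os}, likely emulated: {IsLikelyEmulated(process, os)}";
    }
}
```

Logging `EmulationCheck.Report()` at startup of a test run is a cheap way to make sure the "native" and "emulated" results in your test matrix really come from the environments you think they do.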

Conclusion

CPU translation layers are pivotal for maintaining software compatibility across different hardware architectures. By leveraging sophisticated techniques such as dynamic binary translation, JIT compilation, and system call translation, these layers bridge the gap between ARM and x86/x64 architectures on Windows, macOS, and Linux. As technology continues to advance, these translation layers will play an increasingly important role in enabling seamless interoperability across diverse computing environments.

by Joche Ojeda | May 23, 2024 | CPU

The ARM, x86, and Itanium CPU architectures each have unique characteristics that impact .NET developers. Understanding how these architectures affect your code, along with the importance of using appropriate NuGet packages, is crucial for developing efficient and compatible applications.

ARM Architecture and .NET Development

1. Performance and Optimization:

- Energy Efficiency: ARM processors are known for their power efficiency, benefiting .NET applications on devices like mobile phones and tablets with longer battery life and reduced thermal output.

- Performance: ARM processors may exhibit different performance characteristics compared to x86 processors. Developers need to optimize their code to ensure efficient execution on ARM architecture.

2. Cross-Platform Development:

- .NET Core and .NET 5+: These versions support cross-platform development, allowing code to run on Windows, macOS, and Linux, including ARM-based versions.

- Compatibility: Ensuring .NET applications are compatible with ARM devices may require testing and modifications to address architecture-specific issues.

3. Tooling and Development Environment:

- Visual Studio and Visual Studio Code: Both provide support for ARM development, though there may be differences in features and performance compared to x86 environments.

- Emulators and Physical Devices: Testing on actual ARM hardware or using emulators helps identify performance bottlenecks and compatibility issues.

x86 Architecture and .NET Development

1. Performance and Optimization:

- Processing Power: x86 processors are known for high performance and are widely used in desktops, servers, and high-end gaming.

- Instruction Set Complexity: The complex instruction set of x86 (CISC) allows for efficient execution of certain tasks, which can differ from ARM’s RISC approach.

2. Compatibility:

- Legacy Applications: x86’s extensive history means many enterprise and legacy applications are optimized for this architecture.

- NuGet Packages: Ensuring that NuGet packages target x86 or are architecture-agnostic is crucial for maintaining compatibility and performance.

3. Development Tools:

- Comprehensive Support: x86 development benefits from mature tools and extensive resources available in Visual Studio and other IDEs.

Itanium Architecture and .NET Development

1. Performance and Optimization:

- High-End Computing: Itanium processors were designed for high-end computing tasks, such as large-scale data processing and enterprise servers.

- EPIC Architecture: Itanium uses Explicitly Parallel Instruction Computing (EPIC), which requires different optimization strategies compared to x86 and ARM.

2. Limited Support:

- Niche Market: Itanium has a smaller market presence, primarily in enterprise environments.

- .NET Support: Modern .NET (.NET Core and .NET 5+) does not run on Itanium; only older releases of the .NET Framework offered Itanium builds, so new development for this architecture is rarely practical.

CPU Architecture and Code Impact

1. Instruction Sets and Performance:

- Differences: x86 (CISC), ARM (RISC), and Itanium (EPIC) have different instruction sets, affecting code efficiency. Optimizations effective on one architecture might not work well on another.

- Compiler Optimizations: The C# compiler emits architecture-neutral IL, and the runtime’s JIT compiler turns it into machine code optimized for the CPU it runs on. Understanding the underlying architecture still helps you write more efficient code.
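One way to see the same source code being optimized differently per architecture is `System.Numerics.Vector<T>`, whose width is chosen by the runtime for the CPU it runs on (for instance SSE/AVX registers on x86/x64 or NEON registers on ARM64). The sketch below is a minimal example under that assumption; the `PortableSimd` class name is mine, and `Vector.Sum` requires .NET 6 or later.

```csharp
using System;
using System.Numerics;

public static class PortableSimd
{
    // Sums an int array. The vectorized loop processes Vector<int>.Count
    // elements per step; that count depends on the CPU the code runs on.
    public static int Sum(ReadOnlySpan<int> values)
    {
        int i = 0;
        int total = 0;

        if (Vector.IsHardwareAccelerated && values.Length >= Vector<int>.Count)
        {
            Vector<int> acc = Vector<int>.Zero;
            int lastBlock = values.Length - Vector<int>.Count;

            for (; i <= lastBlock; i += Vector<int>.Count)
            {
                acc += new Vector<int>(values.Slice(i, Vector<int>.Count));
            }

            // Adds the lanes of the accumulator together (.NET 6+).
            total = Vector.Sum(acc);
        }

        // Scalar loop for the remaining elements, and the fallback
        // when no hardware acceleration is available.
        for (; i < values.Length; i++)
        {
            total += values[i];
        }

        return total;
    }
}
```

The code contains no architecture-specific branches, yet the machine code produced for it differs between an x64 desktop and an ARM64 device. Measuring it on each target is still worthwhile, since the speedup is not guaranteed to be the same.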

2. Multi-Platform Development:

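- Conditional Compilation: .NET supports conditional compilation for architecture-specific code. The symbols used below are not predefined by the compiler; you have to define them yourself, for example through DefineConstants in the project file for each build configuration.

```csharp
// ARM, x86 and Itanium are custom symbols that your build must define.
#if ARM
// ARM-specific code
#elif x86
// x86-specific code
#elif Itanium
// Itanium-specific code
#endif
```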

- Libraries and Dependencies: Ensure all libraries and dependencies in your .NET project are compatible with the target CPU architecture. Use NuGet packages that are either architecture-agnostic or specifically target your architecture.
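Conditional compilation fixes the decision at build time. If a single build has to behave differently depending on where it runs, the architecture can also be checked at run time through `RuntimeInformation.ProcessArchitecture`. The following is a minimal sketch; the `ArchitectureInfo` class and its labels are my own naming. Note that the `Architecture` enum has no Itanium member, so there is nothing to switch on for that case.

```csharp
using System;
using System.Runtime.InteropServices;

public static class ArchitectureInfo
{
    // Maps an Architecture value to a readable label. Kept as a pure
    // function so it can be tested on any machine.
    public static string Describe(Architecture arch) => arch switch
    {
        Architecture.X86 => "32-bit x86",
        Architecture.X64 => "64-bit x86 (x64)",
        Architecture.Arm => "32-bit ARM",
        Architecture.Arm64 => "64-bit ARM (Arm64)",
        _ => $"other ({arch})"
    };

    // Describes the architecture the current process is running as.
    public static string DescribeCurrentProcess() =>
        Describe(RuntimeInformation.ProcessArchitecture);
}
```

The same `switch` shape can select an implementation instead of a label, which keeps architecture-specific code paths in one place rather than scattered across `#if` blocks.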

3. Debugging and Testing:

- Architecture-Specific Bugs: Bugs may manifest differently across ARM, x86, and Itanium. Rigorous testing on all target architectures is essential.

- Performance Testing: Conduct performance testing on each architecture to identify and resolve any specific issues.

Supported CPU Architectures in .NET

1. .NET Core and .NET 5+:

- x86 and x64: Full support for 32-bit and 64-bit x86 architectures across all major operating systems.

- ARM32 and ARM64: Support for 32-bit and 64-bit ARM architectures, including Windows on ARM, Linux on ARM, and macOS on ARM (Apple Silicon).

- Itanium: Not supported. .NET Core and .NET 5+ have no Itanium (IA-64) runtime.

2. .NET Framework:

- x86 and x64: Primarily designed for Windows, the .NET Framework supports both 32-bit and 64-bit x86 architectures.

- Limited ARM and Itanium Support: The traditional .NET Framework has limited support for these architectures: native Arm64 support arrived only with .NET Framework 4.8.1, and Itanium was supported only by older releases for specific enterprise applications.

3. .NET MAUI and Xamarin:

- Mobile Development: .NET MAUI (Multi-platform App UI) and Xamarin provide extensive support for ARM architectures, targeting Android and iOS devices which predominantly use ARM processors.

Using NuGet Packages

1. Architecture-Agnostic Packages:

- Compatibility: Use NuGet packages that are agnostic to CPU architecture whenever possible. These packages are designed to work across different architectures without modification.

- Example: Common libraries like Newtonsoft.Json, which contain only managed code and therefore run on any architecture the .NET runtime supports.

2. Architecture-Specific Packages:

- Performance: For performance-critical applications, use NuGet packages optimized for the target architecture.

- Example: Graphics processing libraries optimized for x86 may need alternatives for ARM or Itanium.

Conclusion

For .NET developers, understanding the impact of ARM, x86, and Itanium architectures is essential for creating efficient, cross-platform applications. The differences in CPU architectures affect performance, compatibility, and optimization strategies. By leveraging cross-platform capabilities of .NET, using appropriate NuGet packages, and testing thoroughly on all target architectures, developers can ensure their applications run smoothly across ARM, x86, and Itanium devices.

by Joche Ojeda | May 23, 2024 | CPU

The world of CPU architectures is diverse, with ARM and x86 standing out as two of the most prominent types. Each architecture has its unique design philosophy, use cases, and advantages. This article delves into the intricacies of ARM and x86 architectures, their applications, key differences, and highlights an area where x86 holds a distinct advantage over ARM.

ARM Architecture

Design Philosophy:

ARM (Advanced RISC Machines) follows the RISC (Reduced Instruction Set Computer) architecture. This design philosophy emphasizes simplicity and efficiency, using a smaller, more optimized set of instructions. The goal is to execute instructions quickly by keeping them simple and minimizing complexity.

Applications:

- Mobile Devices: ARM processors dominate the smartphone and tablet markets due to their energy efficiency, which is crucial for battery-operated devices.

- Embedded Systems: Widely used in various embedded systems like smart appliances, automotive applications, and IoT devices.

- Servers and PCs: ARM is making inroads into server and desktop markets with products like Apple’s M1/M2 chips and some data center processors.

Instruction Set:

ARM uses simple and uniform instructions, which generally take a consistent number of cycles to execute. This simplicity enhances performance in specific applications and simplifies processor design.

Performance:

- Power Consumption: ARM’s design focuses on lower power consumption, translating to longer battery life for portable devices.

- Scalability: ARM cores can be scaled up or down easily, making them versatile for applications ranging from small sensors to powerful data center processors.

x86 Architecture

Design Philosophy:

x86 follows the CISC (Complex Instruction Set Computer) architecture. This approach includes a larger set of more complex instructions, allowing for more direct implementation of high-level language constructs and potentially fewer instructions per program.

Applications:

- Personal Computers: x86 processors are the standard in desktop and laptop computers, providing high performance for a broad range of applications.

- Servers: Widely used in servers and data centers due to their powerful processing capabilities and extensive software ecosystem.

- Workstations and Gaming: Favored in workstations and gaming PCs for their high performance and compatibility with a wide range of software.

Instruction Set:

The x86 instruction set is complex and varied, capable of performing multiple operations within a single instruction. This complexity can lead to more efficient execution of certain tasks but requires more transistors and power.

Performance:

- Processing Power: x86 processors are known for their high performance and ability to handle intensive computing tasks, such as gaming, video editing, and large-scale data processing.

- Power Consumption: Generally consume more power compared to ARM processors, which can be a disadvantage in mobile or embedded applications.

Key Differences Between ARM and x86

- Instruction Set Complexity:

  - ARM: Uses a RISC architecture with a smaller, simpler set of instructions.

  - x86: Uses a CISC architecture with a larger, more complex set of instructions.

- Power Efficiency:

  - ARM: Designed to be power-efficient, making it ideal for battery-operated devices.

  - x86: Generally consumes more power, which is less of an issue in desktops and servers but can be a drawback in mobile environments.

- Performance and Applications:

  - ARM: Suited for energy-efficient and mobile applications but increasingly capable in desktops and servers (e.g., Apple M1/M2).

  - x86: Suited for high-performance computing tasks in desktops, workstations, and servers, with a long history of extensive software support.

- Market Presence:

  - ARM: Dominates the mobile and embedded markets, with growing presence in desktops and servers.

  - x86: Dominates the desktop, laptop, and server markets, with a rich legacy and extensive software ecosystem.

An Area Where x86 Excels: High-End PC Gaming and Specialized Software

One key area where x86 can perform tasks that ARM typically cannot (or does so with more difficulty) is in running legacy software that was specifically designed for x86 architectures. This is particularly evident in high-end PC gaming and specialized software.

High-End PC Gaming:

- Compatibility with Legacy Games:

  - Many high-end PC games, especially older ones, are optimized specifically for x86 architecture. Games like “The Witcher 3” or “Crysis” were designed to leverage the architecture and instruction sets provided by x86 CPUs.

  - These games often make extensive use of the complex instructions available on x86 processors, which can directly translate to better performance and higher frame rates on x86 hardware compared to ARM.

- Graphics and Physics Engines:

  - Engines such as Unreal Engine or Unity are traditionally optimized for x86 architectures, making the most of its processing power for complex calculations, realistic physics, and detailed graphics rendering.

  - Advanced features like real-time ray tracing, high-resolution textures, and complex AI calculations tend to perform better on x86 systems due to their raw processing power and extensive optimization for the architecture.

Specialized Software:

- Enterprise Software and Legacy Applications:

  - Many enterprise applications, such as older versions of Microsoft Office, Adobe Creative Suite, or proprietary business applications, are built specifically for x86 and may not run natively on ARM processors without emulation.

  - While ARM processors can emulate x86 instructions, this often comes with a performance penalty. This is evident in cases where businesses rely on legacy software that performs crucial tasks but is not available or optimized for ARM.

- Professional Tools:

  - Professional software such as AutoCAD, certain versions of MATLAB, or legacy database management systems (like some older Oracle Database setups) are heavily optimized for x86.

  - These tools often use x86-specific optimizations and plugins that may not have ARM equivalents, leading to suboptimal performance or compatibility issues when running on ARM.

Conclusion

ARM and x86 architectures each have their strengths and are suited to different applications. ARM’s power efficiency and scalability make it ideal for mobile devices and embedded systems, while x86’s processing power and extensive software ecosystem make it the go-to choice for desktops, servers, and high-end computing tasks. Understanding these differences is crucial for selecting the right architecture for your specific needs, particularly when considering the performance of legacy and specialized software.

by Joche Ojeda | May 22, 2024 | A.I

A New Era of Computing: AI-Powered Devices Over Form Factor Innovations

At a recent Microsoft event, the spotlight was on a transformative innovation that highlights the power of AI over the constant pursuit of new device form factors. The unveiling of the new Surface computer, equipped with a Neural Processing Unit (NPU), demonstrates that enhancing existing devices with AI capabilities is more impactful than creating entirely new device types.

The Microsoft Event: Revolutionizing with AI

Microsoft showcased the new Surface computer, integrating an NPU that enhances performance by enabling real-time processing of AI algorithms on the device. This approach allows for advanced capabilities like enhanced voice recognition, real-time language translation, and sophisticated image processing, without relying on cloud services.

Why AI Integration Trumps New Form Factors

For years, the tech industry has focused on new device types, from tablets to foldable screens, often addressing problems that didn’t exist. However, the true advancement lies in making existing devices smarter. AI integration offers:

- Enhanced Productivity: Automating repetitive tasks and providing intelligent suggestions, allowing users to focus on more complex and creative work.

- Personalized Experience: Devices learn and adapt to user preferences, offering a highly customized experience.

- Advanced Capabilities: NPUs enable local processing of complex AI models, reducing latency and dependency on the cloud.

- Seamless Integration: AI creates a cohesive and efficient workflow across various applications and services.

Comparing to the Humane AI Pin and Rabbit R1 Devices

While devices like the Humane AI Pin and the Rabbit R1 offer innovative new form factors, they often rely heavily on cloud connectivity for AI functions. In contrast, the Surface’s NPU allows for faster, more secure local processing. This means tasks are completed quicker and more securely, as data doesn’t need to be sent to the cloud.

Conclusion: Embracing AI-Driven Innovation

Microsoft’s AI-enhanced Surface computer signifies a shift towards intelligent augmentation rather than just physical redesign. By embedding AI within existing devices, we unlock new potentials for efficiency, personalization, and functionality, setting a new standard for future tech innovations. This approach not only makes interactions with technology smarter and more intuitive but also emphasizes the importance of on-device processing power for a faster and more secure user experience.

For more information and to pre-order the new Surface laptops, visit Microsoft’s official store.

by Joche Ojeda | May 19, 2024 | A.I

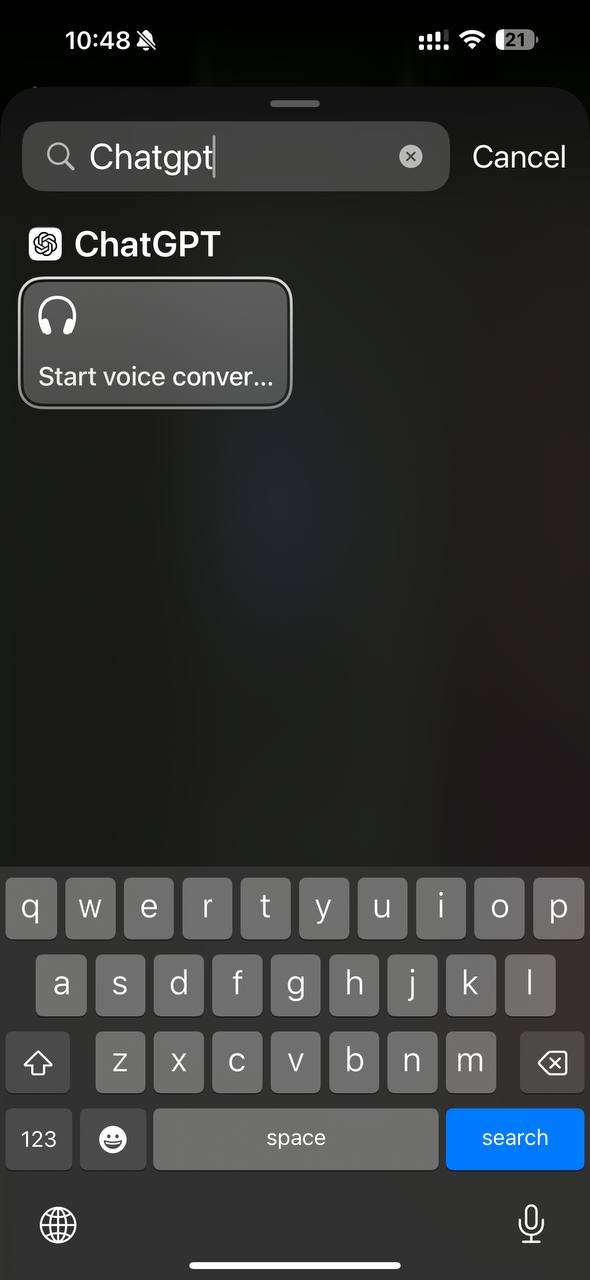

OpenAI’s ChatGPT and Microsoft’s Copilot are two powerful AI tools that have revolutionized the way we interact with technology. While both are designed to assist users in various tasks, they each have unique features that set them apart.

OpenAI’s ChatGPT

ChatGPT, developed by OpenAI, is a large language model chatbot capable of communicating with users in a human-like way. It can answer questions, create recipes, write code, and offer advice. It uses a powerful generative AI model and has access to several tools which it can use to complete tasks.

Key Features of ChatGPT

- Chat with Images: You can show ChatGPT images and start a chat.

- Image Generation: Create images simply by describing them in ChatGPT.

- Voice Chat: You can now use voice to engage in a back-and-forth conversation with ChatGPT.

- Web Browsing: Gives ChatGPT the ability to search the internet for additional information.

- Advanced Data Analysis: Interact with data documents (Excel, CSV, JSON).

Microsoft’s Copilot

Microsoft’s Copilot is an AI companion that works everywhere you do and intelligently adapts to your needs. It can chat with text, voice, and image capabilities, summarize documents and web pages, create images, and use plugins and Copilot GPTs.

Key Features of Copilot

- Chat with Text, Voice, and Image Capabilities: Copilot includes chat with text, voice, and image capabilities.

- Summarization of Documents and Web Pages: It can summarize documents and web pages.

- Image Creation: Copilot can create images.

- Web Grounding: It can ground information from the web.

- Use of Plugins and Copilot GPTs: Copilot can use plugins and Copilot GPTs.

Comparison of Mobile App Features

| Feature | OpenAI’s ChatGPT | Microsoft’s Copilot |
| --- | --- | --- |
| Chat with Text | Yes | Yes |
| Voice Input | Yes | Yes |
| Image Capabilities | Yes | Yes |
| Summarization | No | Yes |
| Image Creation | Yes | Yes |
| Web Grounding | No | Yes |

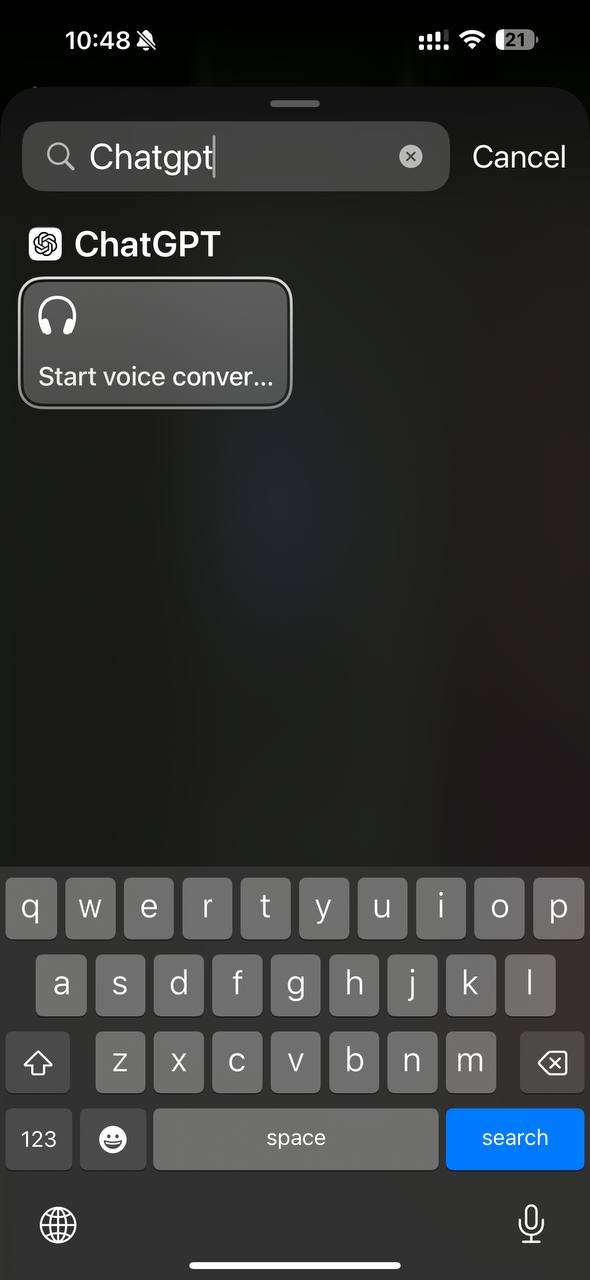

What Makes the Difference: The Action Button on the iPhone

The Action button, available on the iPhone 15 Pro and later models, is a customizable button for quick tasks. By default, it toggles Silent mode. However, users can customize it to perform various other actions, such as opening the camera, turning on the flashlight, or launching a specific app. When set to launch an app, pressing the Action button will instantly open the chosen app, such as the ChatGPT voice interface. This integration is further enhanced by the new GPT-4o capabilities, which offer more accurate responses, better understanding of context, and faster processing times. This makes voice interactions with ChatGPT smoother and more efficient, allowing users to quickly and effectively communicate with the AI.


The ChatGPT voice interface is one of my favorite features, but there’s one thing missing for it to be perfect. Currently, you can’t send pictures or videos during a voice conversation. The workaround is to leave the voice interface, open the chat interface, find the voice conversation in the chat list, and upload the picture there. However, this brings another problem: you can’t return to the voice interface and continue the previous voice conversation.

Microsoft Copilot, if you are reading this, when will you add a voice interface? And when you finally do it, don’t forget to add the picture and video feature I want. That is all for my wishlist.

by Joche Ojeda | May 15, 2024 | C#, dotnet, Linux, Ubuntu, WSL

Hello, dear readers! Today, we’re going to talk about something called the Windows Subsystem for Linux, or WSL for short. Now, don’t worry if you’re not a tech wizard – this guide is meant to be approachable for everyone!

What is WSL?

In simple terms, WSL is a feature in Windows that allows you to use Linux right within your Windows system. Think of it as having a little bit of Linux magic right in your Windows computer!

Why Should I Care?

Well, WSL is like having a Swiss Army knife on your computer. It can make certain tasks easier and faster, and it can even let you use tools that were previously only available on Linux.

Is It Hard to Use?

Not at all! If you’ve ever used the Command Prompt on your Windows computer, then you’re already halfway there. And even if you haven’t, there are plenty of easy-to-follow guides out there to help you get started.

Do I Need to Be a Computer Expert to Use It?

Absolutely not! While WSL is a powerful tool that many developers love to use, it’s also quite user-friendly. With a bit of curiosity and a dash of patience, anyone can start exploring the world of WSL.

As a .NET developer, you might be wondering why there’s so much buzz around the Windows Subsystem for Linux (WSL). Let’s dive into the reasons why WSL could be a game-changer for you.

- Seamless Integration: WSL provides a full-fledged Linux environment right within your Windows system. This means you can run Linux commands and applications without needing a separate machine or dual-boot setup.

- Development Environment Consistency: With WSL, you can maintain consistency between your development and production environments, especially if your applications are deployed on Linux servers. This can significantly reduce the “it works on my machine” syndrome.

- Access to Linux-Only Tools: Some tools and utilities are only available or work better on Linux. WSL brings these tools to your Windows desktop, expanding your toolkit without additional overhead.

- Improved Performance: WSL 2, the current version, runs a real Linux kernel inside a lightweight virtual machine (VM), which brings complete system call compatibility and faster file system performance for files stored inside the Linux file system.

- Docker Support: WSL 2 provides full Docker support without requiring additional layers for translation between Windows and Linux, resulting in a more efficient and seamless Docker experience.
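If your .NET application needs to know whether it is running inside WSL, for example to adjust file paths during development, a common trick is to look at the kernel version string. The sketch below is a heuristic and not an official API: it assumes that WSL kernels identify themselves with the word "microsoft" in `/proc/version`, which is typical but not guaranteed. The `WslCheck` class and its method names are hypothetical.

```csharp
using System;
using System.IO;
using System.Runtime.InteropServices;

public static class WslCheck
{
    // Heuristic (assumption): WSL kernels usually carry "microsoft"
    // somewhere in their kernel version string.
    public static bool LooksLikeWsl(string kernelVersion) =>
        kernelVersion.Contains("microsoft", StringComparison.OrdinalIgnoreCase);

    // Returns true only on Linux, and only if /proc/version matches the heuristic.
    public static bool IsRunningOnWsl()
    {
        if (!RuntimeInformation.IsOSPlatform(OSPlatform.Linux))
        {
            return false;
        }

        const string path = "/proc/version";
        return File.Exists(path) && LooksLikeWsl(File.ReadAllText(path));
    }
}
```

Keeping the string check separate from the file access makes the heuristic easy to test on any machine, including plain Windows, where `IsRunningOnWsl()` simply returns false.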

In conclusion, WSL is not just a fancy tool; it’s a powerful ally that can enhance your productivity and capabilities as a .NET developer.

by Joche Ojeda | May 15, 2024 | Data Synchronization, EfCore

I’m happy to announce the new version of SyncFramework for EfCore, targeting net8.0 and referencing the EfCore 8 NuGet packages as follows:

- EfCore Postgres Version: 8.0.4

- EfCore PomeloMysql Version: 8.0.2

- EfCore Sqlite Version: 8.0.5

- EfCore SqlServer Version: 8.0.5

You can download the new versions from NuGet.org and check the repo here

Happy synchronization everyone!!!!

by Joche Ojeda | May 14, 2024 | C#, Data Synchronization, dotnet

Hello there! Today, we’re going to delve into the fascinating world of design patterns. Don’t worry if you’re not a tech whiz – we’ll keep things simple and relatable. We’ll use the SyncFramework as an example, but our main focus will be on the design patterns themselves. So, let’s get started!

What are Design Patterns?

Design patterns are like blueprints – they provide solutions to common problems that occur in software design. They’re not ready-made code that you can directly insert into your program. Instead, they’re guidelines you can follow to solve a particular problem in a specific context.

SOLID Design Principles

One of the most popular sets of design principles is SOLID. It’s an acronym that stands for five principles that help make software designs more understandable, flexible, and maintainable. Let’s break it down:

- Single Responsibility Principle: A class should have only one reason to change. In other words, it should have only one job.

- Open-Closed Principle: Software entities should be open for extension but closed for modification. This means we should be able to add new features or functionality without changing the existing code.

- Liskov Substitution Principle: Subtypes must be substitutable for their base types. This principle is about creating new derived classes that can replace the functionality of the base class without breaking the application.

- Interface Segregation Principle: Clients should not be forced to depend on interfaces they do not use. This principle is about reducing the side effects and frequency of required changes by splitting the software into multiple, independent parts.

- Dependency Inversion Principle: High-level modules should not depend on low-level modules. Both should depend on abstractions. This principle allows for decoupling.
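Here is a small C# sketch of how some of these principles look in code. The types (`INotifier`, `ConsoleNotifier`, `InMemoryNotifier`, `OrderService`) are hypothetical and invented for this example; they are not taken from the SyncFramework. Interface Segregation is not shown separately, although the single-method `INotifier` interface hints at the idea.

```csharp
using System;
using System.Collections.Generic;

// The abstraction that both high-level and low-level code depend on
// (Dependency Inversion).
public interface INotifier
{
    void Notify(string message);
}

// Each class has one job (Single Responsibility), and either one can be
// used wherever an INotifier is expected (Liskov Substitution).
public sealed class ConsoleNotifier : INotifier
{
    public void Notify(string message) => Console.WriteLine(message);
}

public sealed class InMemoryNotifier : INotifier
{
    public List<string> Messages { get; } = new();

    public void Notify(string message) => Messages.Add(message);
}

// High-level logic that knows nothing about how notifications are delivered.
// A new delivery channel means writing a new INotifier, not editing this
// class (Open-Closed).
public sealed class OrderService
{
    private readonly INotifier _notifier;

    public OrderService(INotifier notifier) => _notifier = notifier;

    public void PlaceOrder(string item) => _notifier.Notify($"Order placed: {item}");
}
```

In production you might pass a `ConsoleNotifier` (or an email sender), while a unit test passes an `InMemoryNotifier` and inspects its `Messages` list. `OrderService` stays the same in both cases.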

Applying SOLID Principles in SyncFramework

The SyncFramework is a great example of how these principles can be applied. Here’s how:

- Single Responsibility Principle: Each component of the SyncFramework has a specific role. For instance, one component is responsible for tracking changes, while another handles conflict resolution.

- Open-Closed Principle: The SyncFramework is designed to be extensible. You can add new data sources or change the way data is synchronized without modifying the core framework.

- Liskov Substitution Principle: The SyncFramework uses base classes and interfaces that allow for substitutable components. This means you can replace or modify components without affecting the overall functionality.

- Interface Segregation Principle: The SyncFramework provides a range of interfaces, allowing you to choose the ones you need and ignore the ones you don’t.

- Dependency Inversion Principle: The SyncFramework depends on abstractions, not on concrete classes. This makes it more flexible and adaptable to changes.

And that’s a wrap for today! But don’t worry, this is just the beginning. In the upcoming series of articles, we’ll dive deeper into each of these principles. We’ll explore how they’re applied in the source code of the SyncFramework, providing real-world examples to help you understand these concepts better. So, stay tuned for more exciting insights into the world of design patterns! See you in the next article!

Related articles

If you want to learn more about data synchronization, you can check out the following blog posts:

- Data synchronization in a few words – https://www.jocheojeda.com/2021/10/10/data-synchronization-in-a-few-words/

- Parts of a Synchronization Framework – https://www.jocheojeda.com/2021/10/10/parts-of-a-synchronization-framework/

- Let’s write a Synchronization Framework in C# – https://www.jocheojeda.com/2021/10/11/lets-write-a-synchronization-framework-in-c/

- Synchronization Framework Base Classes – https://www.jocheojeda.com/2021/10/12/synchronization-framework-base-classes/

- Planning the first implementation – https://www.jocheojeda.com/2021/10/12/planning-the-first-implementation/

- Testing the first implementation – https://youtu.be/l2-yPlExSrg

- Adding network support – https://www.jocheojeda.com/2021/10/17/syncframework-adding-network-support/

by Joche Ojeda | Apr 29, 2024 | Semantic Kernel

Welcome to the fascinating world of artificial intelligence (AI)! You’ve probably heard about AI’s incredible potential to transform our lives, from smart assistants in our homes to self-driving cars. But have you ever wondered how all these intelligent systems communicate and work together? That’s where something called “Semantic Kernel Connectors” comes in.

Imagine you’re organizing a big family reunion. To make it a success, you need to coordinate with various family members, each handling different tasks. Similarly, in the world of AI, different parts need to communicate and work in harmony. Semantic Kernel Connectors are like the family members who help pass messages and coordinate tasks to ensure everything runs smoothly.

These connectors are a part of a larger system known as the Semantic Kernel framework. They act as messengers, allowing different AI models and external systems, like databases, to talk to each other. This communication is crucial because it lets AI systems perform complex tasks, such as sending emails or updating records, just like a helpful assistant.

For developers, these connectors are a dream come true. They make it easier to create AI applications that can understand and respond to us just like a human would. With these tools, developers can build more sophisticated AI agents that can automate tasks and even learn from their interactions. Here is a list of what you get out of the box.

Core Plugins Overview

- ConversationSummaryPlugin: Summarizes conversations to provide quick insights.

- FileIOPlugin: Reads and writes to the filesystem, essential for managing data.

- HttpPlugin: Calls APIs, which allows the AI to interact with web services.

- MathPlugin: Performs mathematical operations, handy for calculations.

- TextMemoryPlugin: Stores and retrieves text in memory, useful for recalling information.

- TextPlugin: Manipulates text strings deterministically, great for text processing.

- TimePlugin: Acquires time of day and other temporal information, perfect for time-related tasks.

- WaitPlugin: Pauses execution for a specified amount of time, useful for scheduling.
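To make the idea of a plugin a little more concrete, here is a minimal sketch in plain Python. It does not use the Semantic Kernel library: the `PluginRegistry` class, its `register` and `invoke` methods, and the small `MathPlugin`, `TextPlugin`, and `TimePlugin` classes are hypothetical stand-ins that only borrow the names from the list above. The point is simply to show the shape of the pattern: a plugin is a named group of functions, and the host looks a function up by name and calls it.

```python
# Hypothetical sketch in plain Python. This is NOT the Semantic Kernel API;
# every class and method name here is an assumption made for illustration.
from datetime import datetime, timezone


class MathPlugin:
    """Stand-in for a math plugin: simple arithmetic."""

    def add(self, a: float, b: float) -> float:
        return a + b


class TextPlugin:
    """Stand-in for a text plugin: deterministic string manipulation."""

    def uppercase(self, text: str) -> str:
        return text.upper()


class TimePlugin:
    """Stand-in for a time plugin: current time information."""

    def utc_now(self) -> str:
        # ISO 8601 timestamp in UTC.
        return datetime.now(timezone.utc).isoformat()


class PluginRegistry:
    """Keeps plugins by name and calls their functions on request."""

    def __init__(self) -> None:
        self._plugins: dict[str, object] = {}

    def register(self, name: str, plugin: object) -> None:
        self._plugins[name] = plugin

    def invoke(self, plugin_name: str, function_name: str, **kwargs):
        # Look up the plugin, then the function on it, then call it.
        plugin = self._plugins[plugin_name]
        function = getattr(plugin, function_name)
        return function(**kwargs)


def build_registry() -> PluginRegistry:
    registry = PluginRegistry()
    registry.register("math", MathPlugin())
    registry.register("text", TextPlugin())
    registry.register("time", TimePlugin())
    return registry


if __name__ == "__main__":
    registry = build_registry()
    print(registry.invoke("math", "add", a=2, b=3))               # 5
    print(registry.invoke("text", "uppercase", text="hello"))     # HELLO
    print(registry.invoke("time", "utc_now"))
```

In a real AI application, the model would be the one deciding which plugin function to call and with which arguments. The registry above only imitates that lookup step by hand.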

So, next time you ask your smart device to play your favorite song or remind you of an appointment, remember that there’s a whole network of AI components working together behind the scenes, thanks to Semantic Kernel Connectors. They’re the unsung heroes making our daily interactions with AI seamless and intuitive.

Isn’t it amazing how far technology has come? And the best part is, we’re just getting started. As AI continues to evolve, we can expect even more incredible advancements that will make our lives easier and more connected. So, let’s embrace this journey into the future, hand in hand with AI.

by Joche Ojeda | Apr 28, 2024 | A.I

Introduction to Semantic Kernel

Hey there, fellow curious minds! Let’s talk about something exciting today—Semantic Kernel. But don’t worry, we’ll keep it as approachable as your favorite coffee shop chat.

What Exactly Is Semantic Kernel?

Imagine you’re in a magical workshop, surrounded by tools. Well, Semantic Kernel is like that workshop, but for developers. It’s an open-source Software Development Kit (SDK) that lets you create AI agents. These agents aren’t secret spies; they’re little programs that can answer questions, perform tasks, and generally make your digital life easier.

Here’s the lowdown:

- Open-Source: Think of it as a community project. People from all walks of tech life contribute to it, making it better and more powerful.

- Software Development Kit (SDK): Fancy term, right? But all it means is that it’s a set of tools for building software. Imagine it as your AI Lego set.

- Agents: Nope, not James Bond. These are like your personal AI sidekicks. They’re here to assist you, not save the world (although that would be cool).

A Quick History Lesson

About a year ago, Semantic Kernel stepped onto the stage. Since then, it’s been striding confidently, like a seasoned performer. Here are some backstage highlights:

- GitHub Stardom: On March 17th, 2023, it made its grand entrance on GitHub. And guess what? It got more than 17,000 stars! (Around 18.2k right now.) That’s like being the coolest kid in the coding playground.

- Downloads Galore: The C# kernel (don’t worry, we’ll explain what that is) had 1,000,000+ NuGet downloads. It’s like everyone wanted a piece of the action.

- VS Code Extension: Over 25,000 downloads! Imagine it as a magical wand for your code editor.

And hey, the .NET kernel even threw a party: it reached a 1.0 release! The Python and Java kernels are close behind with their 1.0 Release Candidates. It’s like they’re all graduating from AI university.

Why Should You Care?

Now, here’s the fun part. Why should you, someone with a lifetime of wisdom and curiosity, care about this?

- Microsoft Magic: Semantic Kernel loves hanging out with Microsoft products. It’s like they’re best buddies. So, when you use it, you get to tap into the power of Microsoft’s tech universe. Fancy, right?

- No Code Rewrite Drama: Imagine you have a favorite recipe (let’s say it’s your grandma’s chocolate chip cookies). Now, imagine you want to share it with everyone. Semantic Kernel lets you do that without rewriting the whole recipe. You just add a sprinkle of AI magic!

- LangChain vs. Semantic Kernel: These two are like rival chefs. Both want to cook up AI goodness. But while LangChain (built around Python and JavaScript) comes with a full spice rack of tools, Semantic Kernel is more like a secret ingredient. It’s lightweight and supports not just Python but also C# and Java. Plus, it’s like the Assistant API: no need to fuss over memory and context windows. Just cook and serve!

So, my fabulous friend, whether you’re a seasoned developer or just dipping your toes into the AI pool, Semantic Kernel has your back. It’s like having a friendly AI mentor who whispers, “You got this!” And with its growing community and constant updates, Semantic Kernel is leading the way in AI development.

Remember, you don’t need a PhD in computer science to explore this. It’s all about curiosity, creativity, and a dash of Semantic Kernel magic. ✨

Ready to dive in? Check out the Semantic Kernel GitHub repository for the latest updates.